Abstract

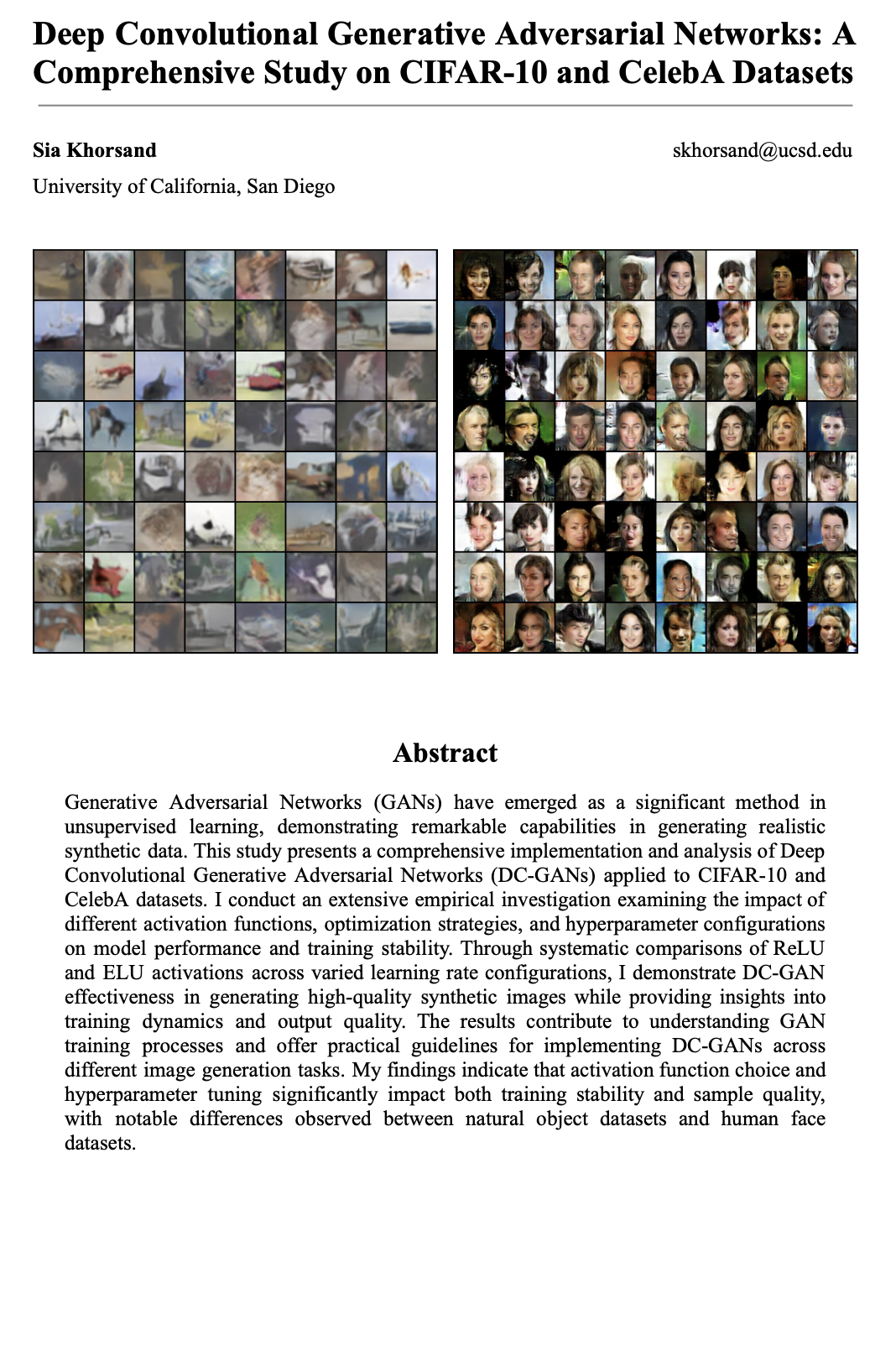

Generative Adversarial Networks (GANs) have emerged as a significant method in unsupervised learning, demonstrating remarkable capabilities in generating realistic synthetic data. This study presents a comprehensive implementation and analysis of Deep Convolutional Generative Adversarial Networks (DC-GANs) applied to CIFAR-10 and CelebA datasets.

I conduct an extensive empirical investigation examining the impact of different activation functions, optimization strategies, and hyper-parameter configurations on model performance and training stability. Through systematic comparisons of ReLU and ELU activations across varied learning-rate configurations, I demonstrate DC-GAN effectiveness in generating high-quality synthetic images while providing insights into training dynamics and output quality.

The results contribute to understanding GAN training processes and offer practical guidelines for implementing DC-GANs across different image-generation tasks. My findings indicate that activation-function choice and hyper-parameter tuning significantly impact both training stability and sample quality, with notable differences observed between natural-object datasets and human-face datasets.

1 Introduction

The field of generative modeling has experienced huge advancement with the introduction of Generative Adversarial Networks by Goodfellow et al. in 2014. These networks have revolutionized the approach to unsupervised learning by introducing a novel adversarial training paradigm that pits two neural networks against each other in a minimax game.

The generator network learns to create realistic data samples from random noise with the goal of fooling the discriminator, while the discriminator network learns to distinguish between real and generated samples. This adversarial process drives both networks to improve iteratively, resulting in generators capable of producing highly realistic synthetic data that can successfully deceive even well-trained discriminators. In short, GANs are basically arm-wrestling matches between two competing neural networks.

The evolution from basic GANs to Deep Convolutional GANs (DC-GANs) was a crucial advancement in the field, addressing many of the training instabilities and mode-collapse issues that plagued early implementations. DC-GANs introduced architecture that significantly improved training stability and output quality, making them particularly effective for image-generation tasks.

Despite these advancements, training GANs remains a challenging task characterized by delicate balance requirements between generator and discriminator performance. The sensitivity to hyper-parameter choices, architectural decisions, and optimization strategies necessitates comprehensive empirical investigation to understand optimal configurations for different datasets and applications.

Research Objectives

2 Methodology

2.1 Architecture Design

My DC-GAN implementation loosely follows the architectural guidelines established by Radford et al., with systematic variations to explore the impact of different design choices. The architecture consists of two competing networks working in an adversarial framework.

Generator Network

Employs a series of transposed-convolution layers to progressively up-sample random-noise vectors into full-resolution images, beginning with a dense layer that reshapes the noise into a small spatial feature map.

Discriminator Network

Progressively down-samples input images to a binary classification, using convolutions and LeakyReLU activations with spectral normalization for CIFAR-10.

2.2 Training Strategy

The training process implements the standard GAN minimax objective, updating discriminator and generator in alternating steps. Multiple stabilization techniques were employed to ensure robust training.

Stabilization Techniques

Learning Rate Schedules

G_LR = D_LR = 1 × 10⁻⁴

G_LR = 5 × 10⁻⁵, D_LR = 1 × 10⁻⁴

G_LR = 2 × 10⁻⁴, D_LR = 3 × 10⁻⁴

2.3 Experimental Design

For each activation × learning-rate setting I train three seeds, log losses, checkpoint every five epochs, and compute Inception Score (50k samples, 10 splits). CIFAR-10 runs for 100 epochs; CelebA converges by epoch 25.

Datasets

Two fundamentally different image datasets were selected to evaluate DC-GAN performance across varied domains: CIFAR-10 for diverse object categories and CelebA for high-fidelity human faces.

CIFAR-10

Contains 60,000 32×32 colour images across ten classes: airplanes, automobiles, birds, cats, deer, dogs, frogs, horses, ships, and trucks.

CelebA

Comprises >200,000 aligned celebrity faces annotated with 40 binary attributes. I use ≈50,000 high-quality images, centre-cropped and resized to 64×64.

Preprocessing Pipeline

• Pixel normalization [-1, 1]

• Random horizontal flips

• Small rotation augmentation

• Quality filtering

• Centre crop faces

• Resize to 64×64

• Pixel normalization [-1, 1]

4 CIFAR-10 Results & Discussion

4.1 Training Dynamics Analysis

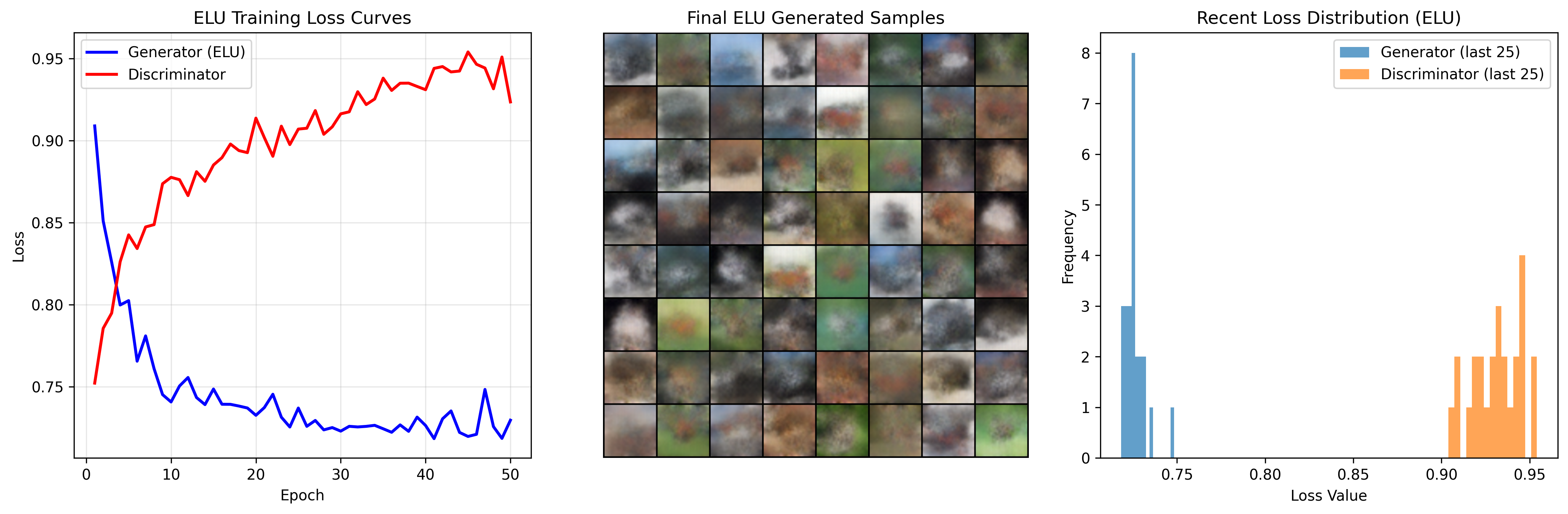

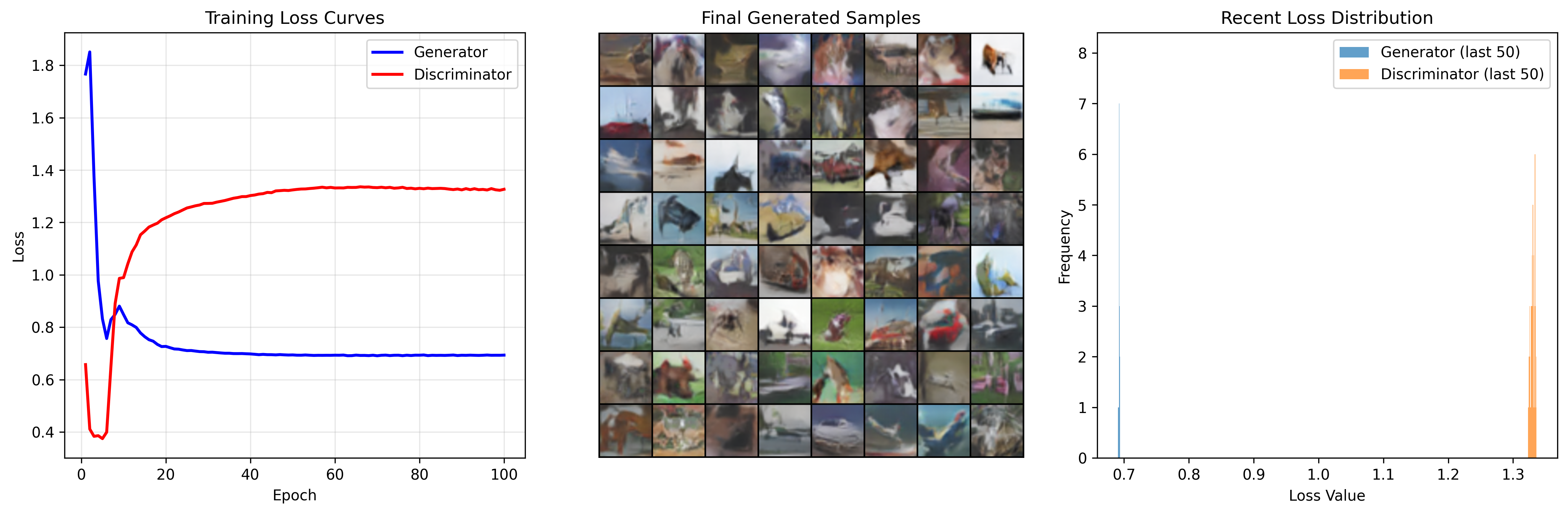

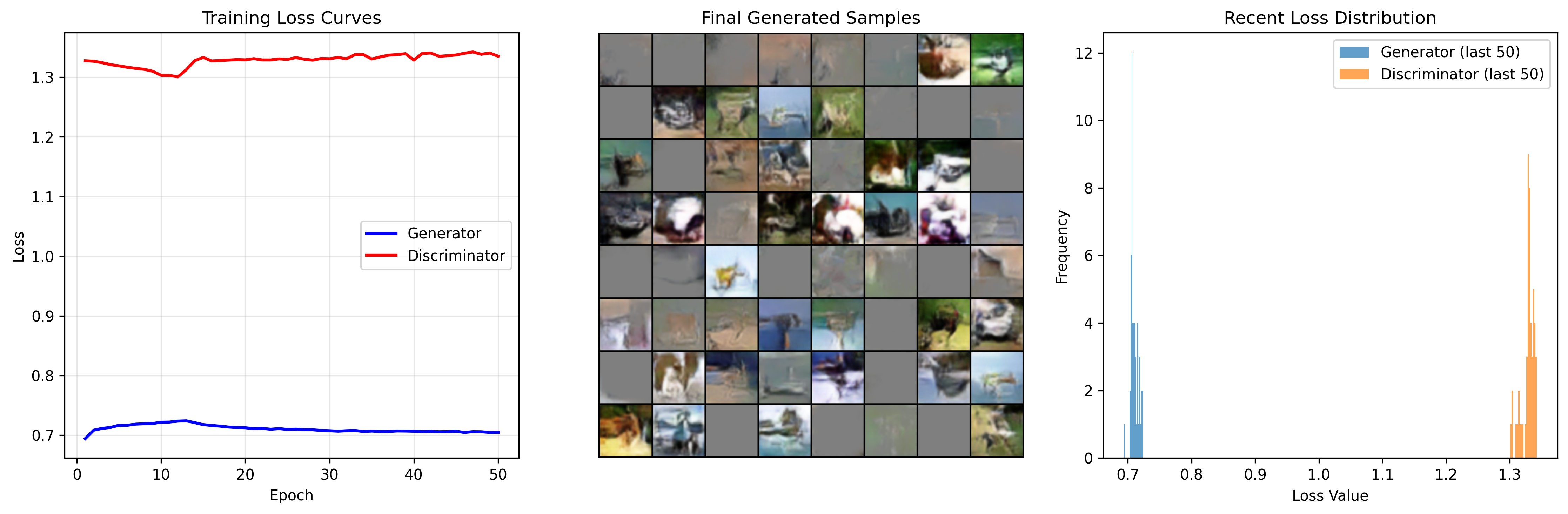

The CIFAR-10 experiments revealed significant differences in training stability and output quality between activation functions and learning rate configurations. Three key scenarios emerged from the systematic evaluation.

ReLU Activation

ReLU's sparse activations preserve high-frequency detail essential for diverse object generation. Performs best with asymmetric learning rates.

ELU Activation

ELU's smooth negative region led to oversmoothing on CIFAR-10's diverse textures, resulting in mode collapse and poor sample quality.

CIFAR-10 Training Results

Key Training Scenarios

Best: ReLU + Asymmetric LR

G: 1e-4, D: 2e-4 → Healthy dynamics, vivid objects, IS 5.49

Worst: ELU + Balanced LR

Diverging losses, mode collapse, blurry blobs, IS 2.87

Problematic: ReLU + Balanced LR

Flat losses, generator complacency, uniform grey patches

CIFAR-10 Performance

Optimal Configuration:

ReLU + Asymmetric Learning Rate

Key Insights

4.2 Architecture Impact Assessment

ELU's smooth negative region oversmooths outputs on CIFAR-10, while ReLU's sparse activations preserve high-frequency detail. However, activation alone is insufficient—ReLU needs an asymmetric LR (higher D) to excel on heterogeneous objects.

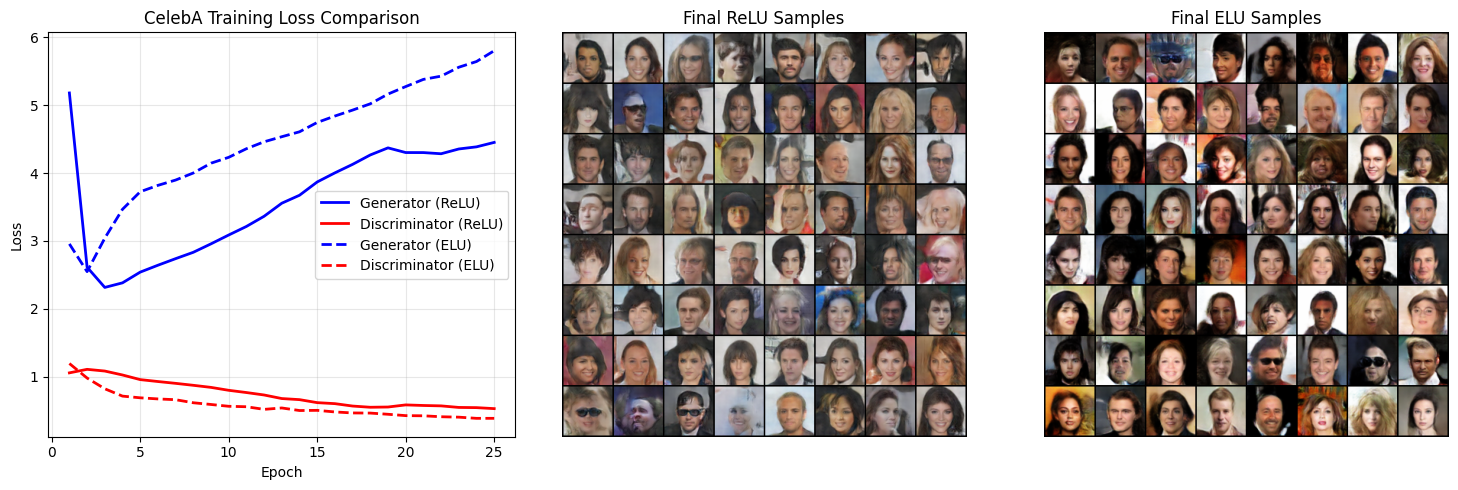

5 CelebA Results & Discussion

5.1 Architecture Impact Assessment

CelebA's facial geometry stabilizes training for both activations, but distinct differences emerge in output quality and fine-grained detail preservation. The structured nature of faces allows both ReLU and ELU to achieve reasonable stability, highlighting the importance of activation choice for detail rendering.

CelebA Training Results Comparison

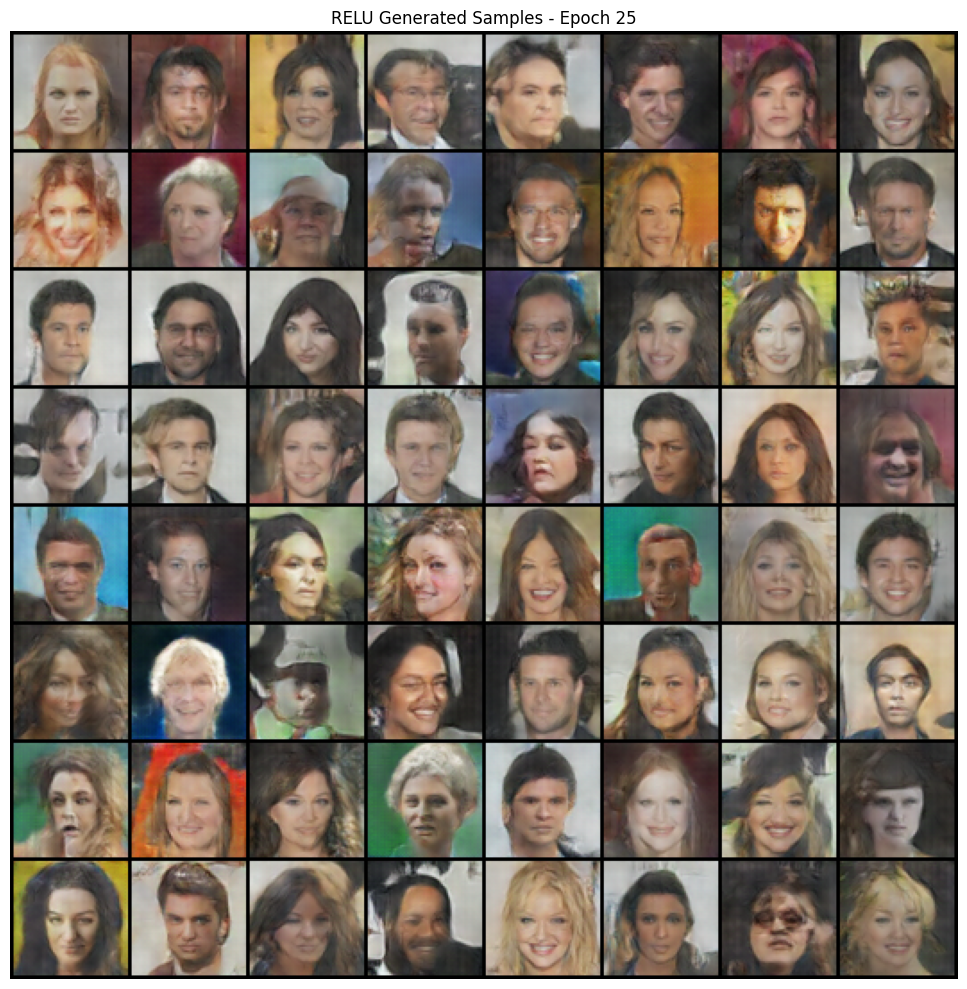

ReLU: Photorealistic Detail

ReLU better preserves hair strands and skin pores, generating photorealistic faces with sharp detail and accurate anatomy across diverse demographics.

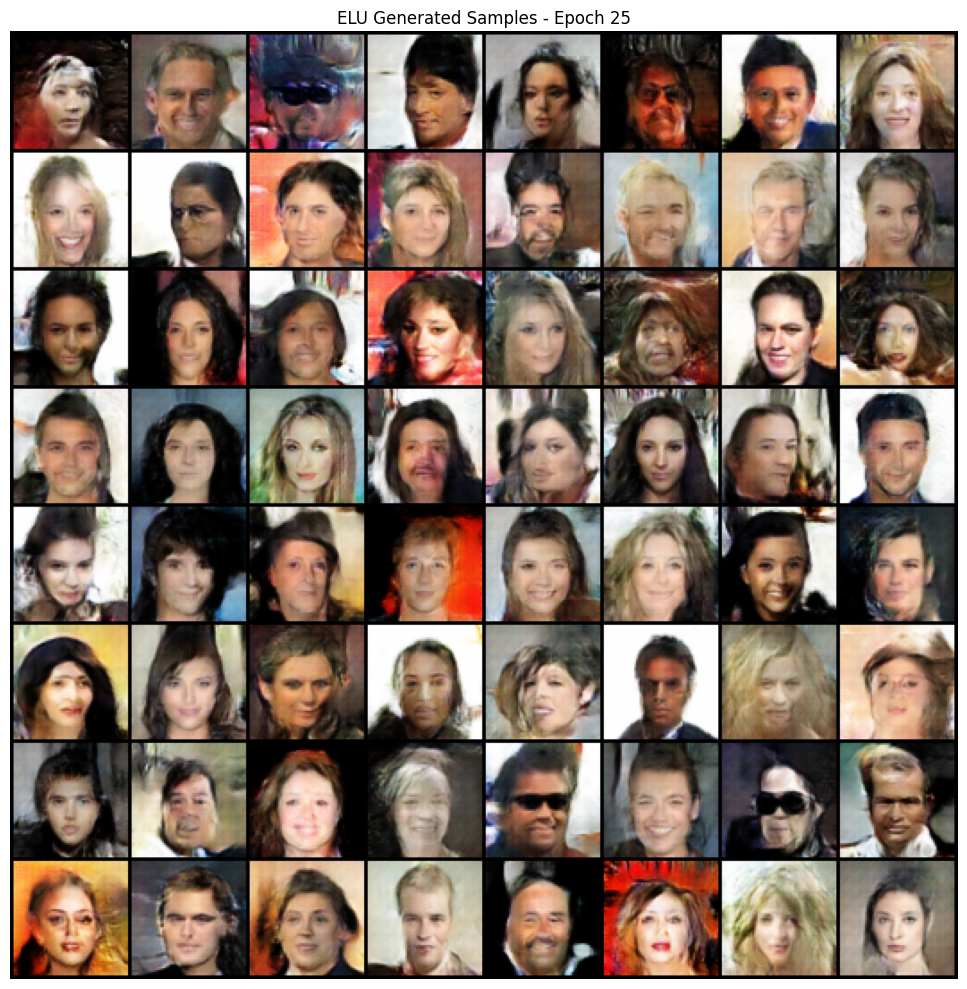

ELU: Airbrushed Softness

ELU yields softer, airbrushed faces with less texture detail. While aesthetically pleasing, lacks the fine-grained realism achieved by ReLU.

5.2 Hyperparameter Optimization

Unlike CIFAR-10, CelebA proved more robust to learning rate variations. The structured domain of human faces allows for more balanced training dynamics, though ReLU still demonstrated clear superiority.

CelebA Performance

Optimal Configuration:

ReLU + Face-tuned LR

(G: 2e-4, D: 3e-4)

CelebA Insights

5.3 Sample Quality Evaluation

Quality Comparison Summary

• Photorealistic skin texture

• Sharp hair definition

• Detailed facial features

• Natural lighting effects

• Smooth, airbrushed skin

• Softer hair rendering

• Less textural detail

• Pleasant but less realistic

ReLU renders high-frequency detail (skin, hair) convincingly, while ELU yields softer features and lower Inception Scores, confirming ReLU's superiority for high-fidelity faces. The reproducibility across different seeds demonstrates the stability of the optimal configuration.

6 Analysis & Conclusion

6.1 Comparative Analysis

CIFAR-10 requires aggressive discriminator learning (2 × G) plus ReLU to conquer class diversity; CelebA is learning-rate robust but still favours ReLU for sharp detail. The fundamental difference lies in dataset complexity and domain structure.

CIFAR-10 Requirements

CelebA Characteristics

6.2 Training Insights

Early Warning Signs

Discriminator loss > 1.5 and generator loss pinned at ≈ 0.7 within 20 epochs signal collapse. These indicators proved consistent across all failed experiments.

Activation choice was the dominant stability factor; learning rate asymmetry mattered chiefly for CIFAR-10. The importance of monitoring training dynamics early cannot be overstated—most failure modes manifest within the first 20 epochs.

6.3 Practical Implementation Guidelines

Recommended Best Practices

General Guidelines

Diverse Datasets (CIFAR-10-like)

Structured Datasets (Faces)

Key Findings

Activation-function choice significantly impacts DC-GAN performance: ReLU consistently outperforms ELU across object and face domains. Optimal configurations achieved IS 5.49 ± 1.8 (CIFAR-10) and IS 6.82 ± 1.4 (CelebA).

By a narrow margin, ReLU demonstrated the most consistent performance across all datasets, showing the best balance between training stability and output quality. The choice of activation function proved more critical than learning rate optimization, particularly for structured domains like human faces.

Conclusion & Future Work

This study demonstrates that while specific architectural choices matter significantly, the optimal configuration depends heavily on dataset characteristics and practical constraints. For image generation tasks, ReLU activation functions provide superior performance when properly tuned with appropriate learning rate schedules.

The most important takeaway is that thorough hyperparameter tuning and systematic evaluation are often more critical than complex architectural modifications. Both CIFAR-10 and CelebA achieved high-quality results when optimal configurations were identified through careful experimentation.

Future Research Directions

Future work will extend these experiments to Progressive-GAN and StyleGAN architectures and explore transfer-learning strategies to build more generalizable generative models capable of producing high-quality synthetic data across diverse domains.